
Portfolio

This is a selection of past and ongoing projects, in reverse chronological order.

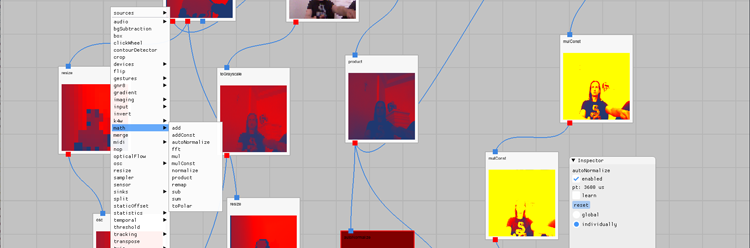

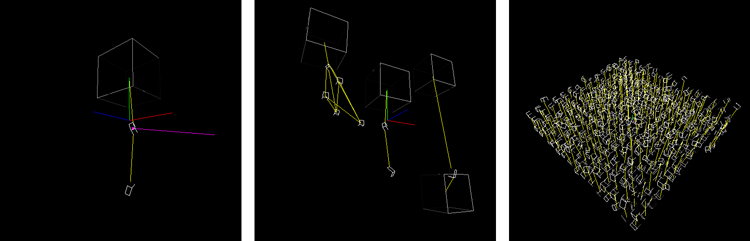

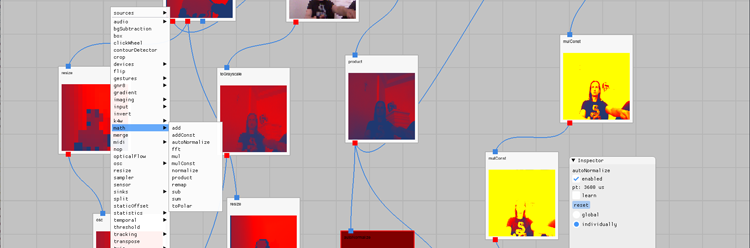

sqıd: a Visual Programming Environment (2020 – 2025)

sqıd is a multi-purpose visual programming tool that provides an easy-to-use and easy-to-extend solution for data processing and data-flow programming. It is built to be lightweight and supports numerous input devices and networking protocols, with a heavy focus on research tasks in HCI, UbiComp, and IoT contexts.

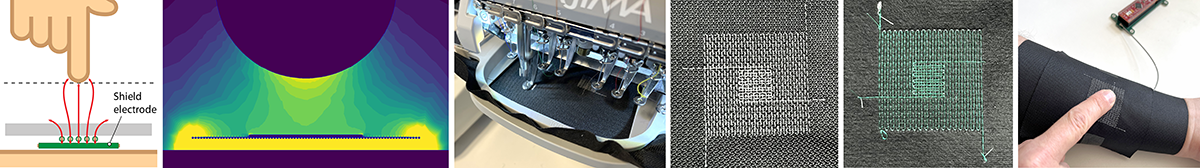

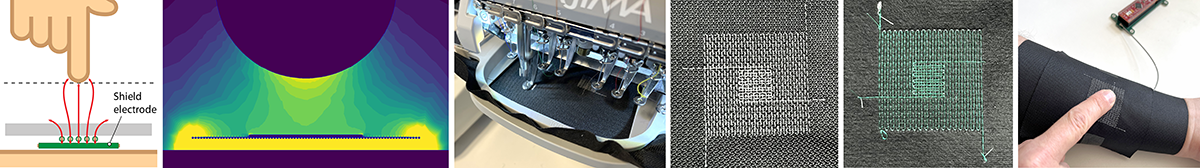

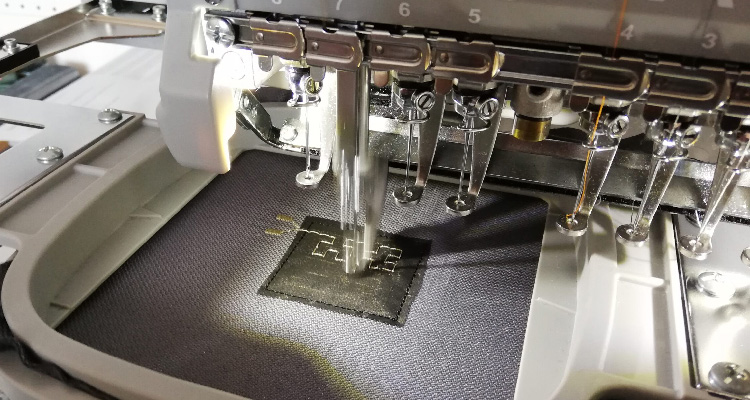

Embroidered Driven Shields (2025)

We implement driven shielding on a textile capacitive touch sensor, considerably improving its signal quality by guarding it from parasitic capacitance. Driven shields are well established for traditional printed sensors; however, we are the first to translate this technique to textiles, in a single-step process using machine embroidery with enameled copper wire as the bobbin thread.

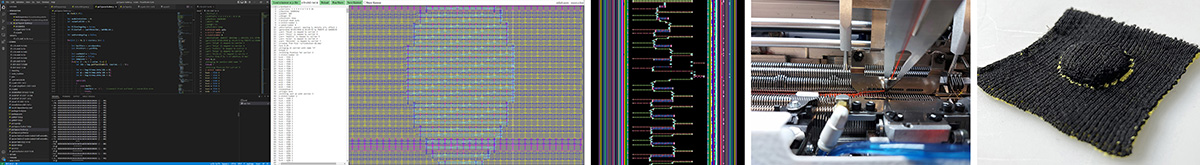

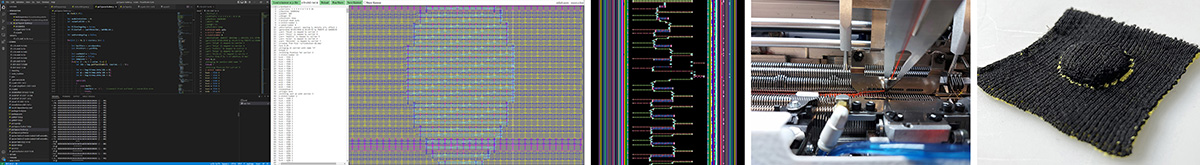

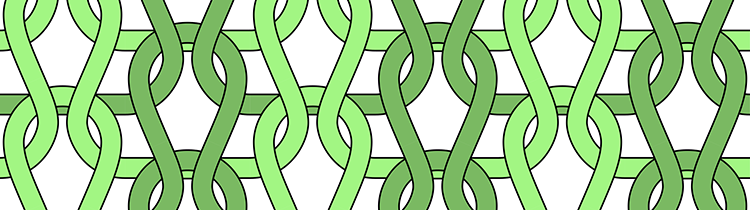

Generative Knitting (2021 – 2025)

Knitting hardware and software are highly optimized for mass production and require considerable operating experience and training. We develop tools and scripts that simplify access to knitting technology for novices, DIY tinkerers, and HCI researchers who come from a CS background, like ourselves. Building on top of pre-existing platforms, we focus on generating knits that can serve as UI devices.

Loopsense (2024)

credit: University of Applied Sciences Upper Austria


With Loopsense, we present a knitted resistive force sensor cell that may consist of only a single stitch and can be easily concealed in a variety of knitted designs. A major novelty is that it can also discern between actuation types: we demonstrate methods of distinguishing between horizontal and vertical strain direction as well as pressure.

Twill-Based Force Sensors (2023)

credit: University of Applied Sciences Upper Austria


Together with knitting expert STOLL, we investigated optimizations of knitted force/strain sensors by adjusting structural properties and by adding Lycra to the substrate yarn. In contrast to related work, we experimented with twill structures, which provide superior stability compared to Plain, Double Jersey, or Rib structures.

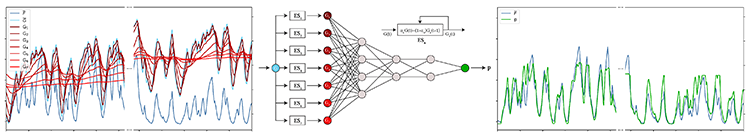

AI x Textile Sensing (2023)

credit: University of Applied Sciences Upper Austria


In this work, we address inconsistencies in the raw readings of knitted resistive sensors. We mitigate unfavorable effects such as hysteresis, offset, and long-term drift by employing a small-scale and therefore computationally inexpensive Artificial Neural Network (ANN) in combination with straightforward data pre-processing.

spaceR (2022)

credit: University of Applied Sciences Upper Austria


spaceR is a design for knitted tactile UI elements that are soft, compressible, and stretchable. They sense pressure with high sensitivity and responsiveness, can be operated eyes-free, and provide non-binary (i.e., continuous) sensing. Using a regular flatbed knitting machine, the multi-component elements are fabricated ready-made, without requiring any post-processing.

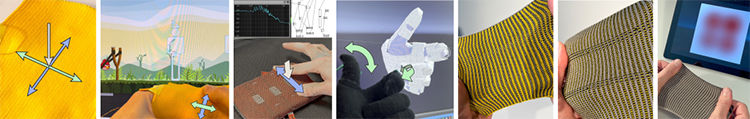

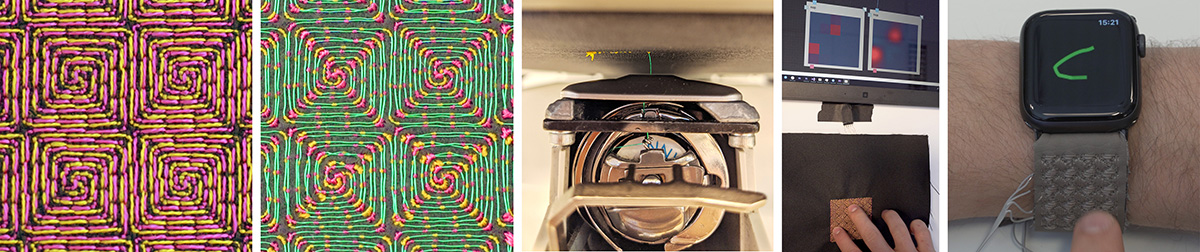

TexYZ: 3DOF Textile Multitouch (2021)

credit: University of Applied Sciences Upper Austria


TexYZ is the world’s first textile capacitive multitouch sensing matrix, fabricated with a commodity embroidery machine. It has an appealing haptic quality and can act as a visual cue for interaction. TexYZ can handle multitouch without showing ghosting effects, which is a considerable limitation of previous textile touchpads.

Embroidered FSR Sensors (2020)

credit: University of Applied Sciences Upper Austria


This work presents pressure sensors made of 100% textile components. They are easy to operate, since the sensing principle is similar to that of common FSR (Force-Sensing Resistor) sensors. However, while FSRs require rather high actuation forces, these textile patches are highly sensitive. The sensors can be rapidly applied to numerous pre-existing fabrics, in arbitrary shape and scale.

[Non-Disclosed VW Project #3] (2020 – 2023)

Within the FFG-funded TextileUX research project, we created prototypes for Volkswagen Future Centre Europe, Potsdam. Currently non-disclosed; more details to follow.

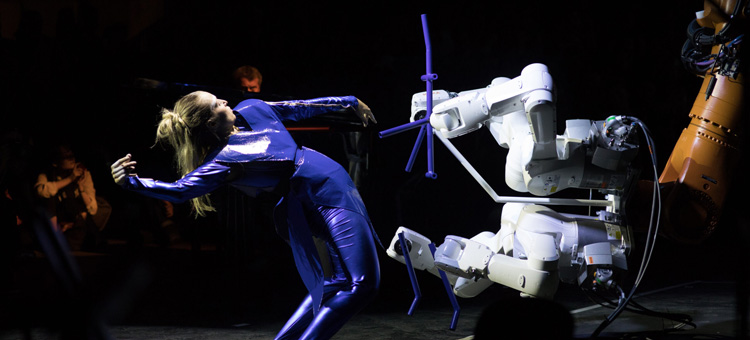

Underbody (2019)

credit: Tom Mesic


Underbody is a 15-minute dance performance, premiered at the Big Concert Night of the Ars Electronica Festival 2019.


[Non-Disclosed KTM Project] (2019 – 2021)

Within the FFG-funded TextileUX research project, we created prototypes for KTM E-Technologies. Currently non-disclosed; more details to follow.

[Non-Disclosed BMW Project #2] (2019 – 2020)

Within the FFG-funded TextileUX research project, we created prototypes for the BMW Forschungs- und Innovationszentrum (FIZ), Munich. Currently non-disclosed; more details to follow.

Textile UX (2018 – 2023)

credit: University of Applied Sciences Upper Austria


Together with partners from industry (BMW, VW, KTM, ...) and academia (JKU SoMaP, University for Arts and Design Linz, TU Dresden, ...), we worked on novel user interfaces for textiles. The FFG-funded four-year research project TextileUX investigated interaction methods (input + output) for textile-based interfaces, with the goal of seamlessly integrating computational environments into our everyday surroundings.


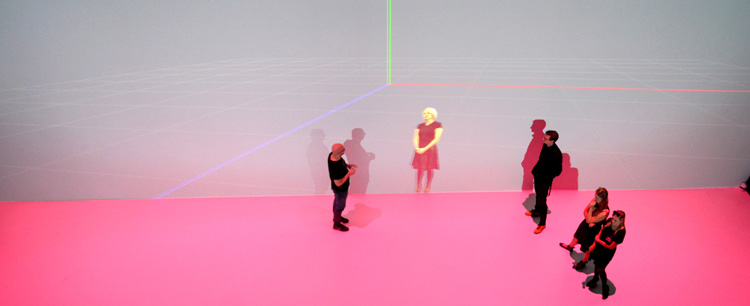

SAP Inspiration Pavilion VR Showcase (2018)

credit: SAP SE


The SAP Pavilion at the SAP Headquarters in Walldorf confronts visitors with presentations and interactive installations dealing with the software giant’s history, business, and subject area.


Rotax Max Dome (2018)

credit: Vanessa Graf


The Rotax Max Dome e-kart racing track blends digital gaming worlds with long-term competition and team play elements. The experience is distributed between a (partly virtual) racetrack and additional game rooms, akin to popular escape room settings, and makes use of high-tech elements such as projected spatial Augmented Reality.


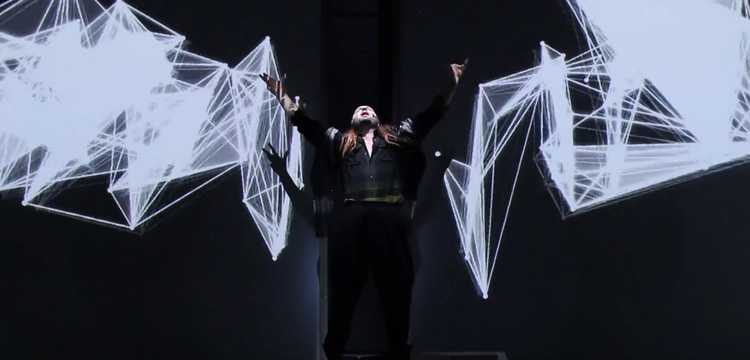

David Bowie’s Lazarus, Music theater Linz (2018)

credit: OÖ Theater und Orchester GmbH


Johannes von Matuschka’s interpretation of Lazarus represents a substantial innovation in the field of musical theater, with its unconventional and distinctive use of space and stage combined with omnipresent projected media augmentations, both pre-produced and interactive.


NTT Swarm Arena (2017 – 2018)

credit: Tom Mesic


At the Japanese telecommunication giant NTT’s 2018 R&D Forum in Tokyo, the demo Swarm Arena made use of seven ground bots equipped with LED displays and five quadcopters featuring single LED emitters for a choreographed showcase of a novel display methodology for artistic expression, with special focus on sports events.


Immersify (2017 – 2018)

credit: Ars Electronica


The EU-funded research project Immersify explored the potential of cutting-edge VR technology and media for immersive single- and multi-user environments, including high-performance low-latency/live-streaming technology.


[Non-Disclosed VW Project #2] (2017)

Interactive sketch of a future mobility vision, conceptualized and implemented for Volkswagen Future Centre Europe, Potsdam. Non-disclosed until 09/2022.

[Non-Disclosed VW Project #1] (2017)

Interactive sketch of a future mobility vision, conceptualized and implemented for Volkswagen Future Centre Europe, Potsdam. Non-disclosed until 01/2022.

ORI* w/ Matthew Gardiner (2016)

credit: Matthew Gardiner


The art-based research project ORI* investigates the use of computational origami, robotics, and material technology within the context of functional aesthetics and oribotics.


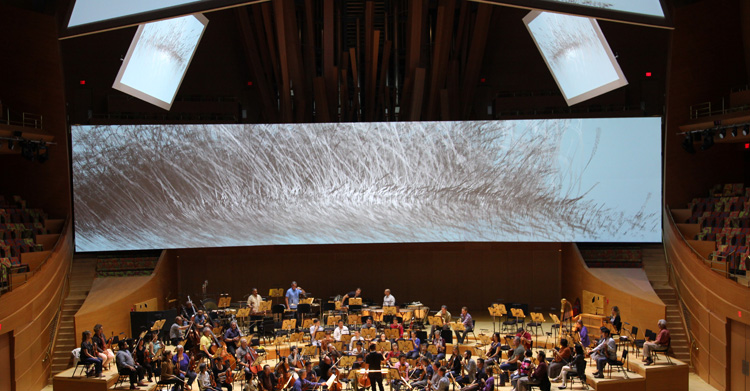

Ma mère l’Oye w/ LA Philharmonic & Bruckner Orchester (2017)

credit: Roland Aigner, Ars Electronica


Premiered as the opening to the City of Light Festival at the Disney Hall in Los Angeles, Ma mère l’Oye was a multimedia spectacle with unique utilization of the Disney Hall’s space and architecture, including seven distributed projection displays for providing interactive/reactive visuals.


Agent Unicorn w/ Anouk Wipprecht (2016)

credit: Vita, Local Androids


Agent Unicorn is a head-worn device designed for children with ADHD or autism. The incorporated Brain-Computer Interface (BCI) tracks brain activity in real time and triggers recordings via an embedded camera, which are used for self- and professional behavior analysis.


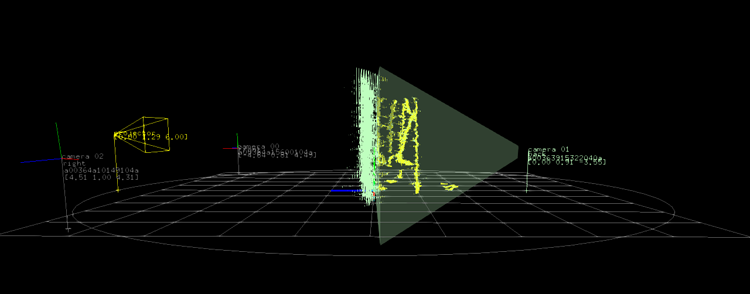

Cultural Heritage: Point Cloud VR Visualization (2015)

credit: Ars Electronica


As a highlight of the Ars Electronica Center’s permanent Deep Space 8K program, the Cultural Heritage item includes immersive, true-to-life tours through cultural sites from all over the world, 3D-scanned and processed for stereoscopic display and interactive fly-through.


[Non-Disclosed Audi Project] (2014)

Contracted research and development for Audi, in the area of driving simulation. Non-disclosed until further notice.

Murmur w/ Aakash Odedra (2013 – 2014)

credit: Sean Goldthorpe


Murmur is a multimedia-packed dance performance by Aakash Odedra, created in close collaboration with the Ars Electronica Futurelab and choreographer Lewis Major, supported by the Arts Council England and awarded with the Sky Arts Academy Arts Scholarships and IdeasTap.


Audi Virtual Engineering Terminal (2013 – 2014)

credit: Audi AG


Developed for Audi’s Technical Development department, the Virtual Engineering Terminal is a modular multi-component media installation simulating and demonstrating driver assistance systems in virtual environments.


[Non-Disclosed BMW Project #1] (2012)

Contracted research and development for BMW Forschungs- und Innovationszentrum (FIZ), Munich, with focus on collaborative automotive construction. Non-disclosed until further notice.

(St)Age of Participation w/ Klaus Obermaier (2011 – 2014)

credit: Claudia Schnugg, Ars Electronica


A four-year art-based research endeavor, spawning several public media art performances investigating the impact of social media, user-generated content, and a culture of collaboration and co-creation within the context of stage-based performances.


Cadet (2011 – 2013)

credit: Roland Aigner, Ars Electronica


The Center for Advances in Digital Entertainment Technologies (CADET) was a collaboration of the Ars Electronica Futurelab with the University of Applied Sciences Salzburg. It was a joint effort to streamline immersive technologies for application in gaming and digital entertainment (see GitHub project).

Fuwa-Vision (2011)

Research prototype of an interactive auto-stereoscopic display for multiple users. Fuwa-Vision requires neither glasses nor mechanically moving components. A derivative resulted in an Emerging Technologies article and demo at SIGGRAPH Asia 2012.

Gesture Linguistics Research at NUS (2011)

As a follow-up to the internship at Microsoft Research, a study of human preferences in using mid-air gestures produced a taxonomy of gesture types, in order to categorize gestures with respect to HCI-relevant commands and tasks. From a comprehensive elicitation study, gesture type preferences with respect to specific fine-grained actions were identified and presented in a Technical Report, freely available via Microsoft Research.

[Non-Disclosed Microsoft Research Internship] (2010 – 2011)

Microsoft’s release of the Kinect gaming controller in 11/2010 marked the advent of low-cost depth-sensing cameras, providing an affordable means for real-time 3D sensing to a vast number of researchers, developers, artists, and tinkerers. It was the starting signal for the industry to create numerous similar devices and certainly posed new challenges to user interface designers. An internship at Microsoft Research was dedicated to new and holistic ways of gesture language interpretation and design.

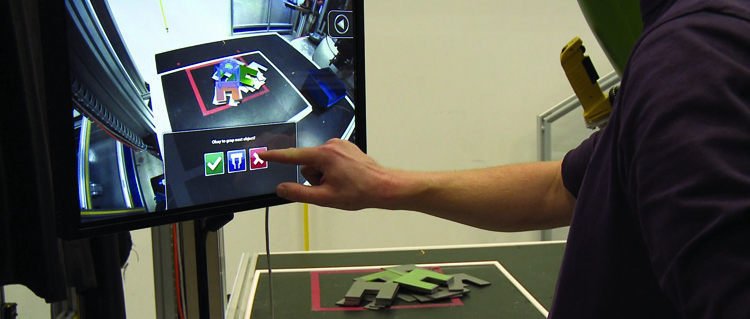

Augmented Reality Assisted Multimodal Control for Robotic Arm (2009 – 2010)

credit: Roland Aigner, University of Applied Sciences Upper Austria


This Master's thesis explores a novel interface for command-and-control of robotic arms, especially targeted at use cases where speed outweighs precision requirements. A multi-modal interface was created, which combined multitouch input with a command-based speech interface and 6-DOF hand pose tracking for live control of the robotic arm.


Augmented Reality Based Human Robot Interaction (2009 – 2010)

credit: ProFactor GmbH


The objective of the FFG-funded research project AHUMARI was to explore new human-robot interaction methods for controlling and programming industrial robots, including presentation of relevant process data that is usually hidden from or unknown to novices. Multi-modal programming and operation methods were evaluated, including optically tracked 3D sticks and speech input, while in-situ process information was provided via spatial and display-based Augmented Reality techniques.


Low-Latency WAN Streaming for Server Based Rendering (2008)

credit: RTT AG


Research and implementation of a proof-of-concept prototype, performed during an internship at the Munich-based high-fidelity computer graphics specialist RTT AG (now 3DExcite GmbH), laid the foundation for RTT PowerHouse, an application for low-latency streaming of interactive server-based raytracing imagery, released in 2009.

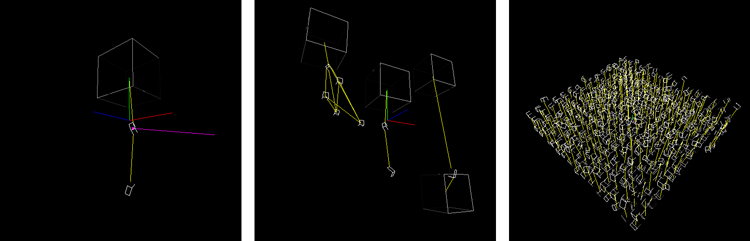

Dynamics Simulation Engine for Realtime Applications (2008)

CPU-based physics engine for particle and rigid body dynamics simulation, developed as part of a Bachelor's thesis for objectively comparing precision and performance tradeoffs between different integrators.
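
As a rough illustration of what such an integrator comparison can look like, the following minimal sketch (not code from the thesis) steps an undamped spring-mass system with two common integrators and measures the relative energy error after a fixed number of steps. The choice of integrators, the test system, and all function names are assumptions made for this example.

```python
def explicit_euler(x, v, dt, k=1.0, m=1.0):
    """Explicit (forward) Euler: position and velocity both use the old state."""
    a = -k * x / m
    return x + v * dt, v + a * dt


def semi_implicit_euler(x, v, dt, k=1.0, m=1.0):
    """Semi-implicit (symplectic) Euler: update velocity first, then use it for position."""
    a = -k * x / m
    v_new = v + a * dt
    return x + v_new * dt, v_new


def energy(x, v, k=1.0, m=1.0):
    """Total mechanical energy of the spring-mass system."""
    return 0.5 * m * v * v + 0.5 * k * x * x


def energy_drift(step, steps=1000, dt=0.01):
    """Relative energy error after `steps` steps, starting from x=1, v=0."""
    x, v = 1.0, 0.0
    e0 = energy(x, v)
    for _ in range(steps):
        x, v = step(x, v, dt)
    return abs(energy(x, v) - e0) / e0


if __name__ == "__main__":
    for name, step in [("explicit Euler", explicit_euler),
                       ("semi-implicit Euler", semi_implicit_euler)]:
        print(f"{name}: relative energy drift = {energy_drift(step):.5f}")
```

Both integrators cost one force evaluation per step, yet explicit Euler steadily gains energy on this system while the semi-implicit variant keeps the error bounded, which is the kind of precision/performance tradeoff such a comparison makes visible.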

Xenowing (2005)

credit: funworld ag


Action-packed puzzle game, developed for the touchscreen-based PhotoPlay gaming terminal.

Damage (2004)

credit: funworld ag


Highly popular puzzle game, developed for the touchscreen-based PhotoPlay gaming terminal.

Bubble Shooter (2004)

credit: funworld ag


Popular puzzle game, developed for the touchscreen-based PhotoPlay gaming terminal, introducing some features that were quite unconventional for touchscreen game terminals of the era.

Rumbling Marbles (2003)

credit: funworld ag


Highly popular puzzle game, developed for the touchscreen-based PhotoPlay gaming terminal.

Fragile (2000)

credit: funworld ag


Popular puzzle game, developed for the touchscreen-based PhotoPlay gaming terminal.

© copyright 2025 | rolandaigner.com | all rights reserved
